This feature lets users define regions in an image for recognition. Regions are defined manually by drawing a box over part of the image with the mouse (similar to cropping). Each image region is stored under a user-defined name, together with its height, width, and XY coordinates. The image regions are converted to text by the OCR workflow step clsAzureMLGetText.
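As a rough illustration of what is stored for each region, the sketch below models a region as a name plus its position and size. The class name, field names, and the top-left pixel origin are assumptions made for this example; the product's actual storage format is not described here.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ImageRegion:
    """Hypothetical record for one named region (field names are assumptions)."""
    name: str    # user-defined region name
    x: int       # left edge, in pixels from the left side of the image
    y: int       # top edge, in pixels from the top of the image
    width: int
    height: int

    def box(self) -> Tuple[int, int, int, int]:
        """Return (left, top, right, bottom), the form most cropping tools expect."""
        return (self.x, self.y, self.x + self.width, self.y + self.height)

    def fits_within(self, image_width: int, image_height: int) -> bool:
        """True when the region lies entirely inside an image of the given size."""
        left, top, right, bottom = self.box()
        return 0 <= left < right <= image_width and 0 <= top < bottom <= image_height


# Example values are made up for illustration.
invoice_no = ImageRegion(name="InvoiceNumber", x=420, y=35, width=160, height=28)
print(invoice_no.box())
print(invoice_no.fits_within(800, 600))
```

Keeping the name alongside the coordinates is what allows a later step to report recognized text per region rather than for the whole image.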

You'll need to navigate to the Status - Status page.

Select the Settings - Image Regions menu option.

The Image Regions UI is rendered below.

Create Image Regions.

Select the Actions - Create menu option.

A popup is rendered for configuration. You must provide a name and choose an image file from a local folder. Click the Create button to confirm.

The image is uploaded with the region name, and a confirmation message is displayed in the top-right corner.

The image below is used in this example.

Select the region and click the Actions - Edit menu option to add the actual image regions.

The Manage Image Regions UI is rendered below. Use the mouse to draw a box over the part of the image you want to capture.

Double-click the box to name the image region. Then, click the Save button to confirm.

Using the above approach, you may create multiple image regions. All image regions are listed in a table at the bottom of the page. To change a region, select it, adjust its area, and click the Update Regions button to confirm. Click the Clear Regions button to permanently remove all the image regions.

Use Image Regions in the process.

- Create a new process definition using the clsAzureMLGetText workflow step, as below.

- Click the clsAzureMLGetText step to configure the “Settings” properties. You must provide the step name and the image file path on the app server. Provide the variable/global reference to store the result text. Provide the variable/global reference to store the Azure ML percentage accuracy. Click the Save button.

- Click the clsAzureMLGetText step to configure the “Optional” properties. Select the image from the drop-down list to fetch the image regions. Click the Save button.

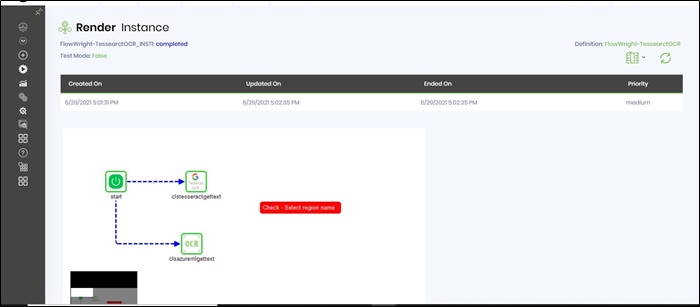

- Save the process definition. Generate and execute a new process instance. Render the process instance to view the step properties. The image regions are converted to text using the OCR approach.
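Conceptually, the step crops each named region out of the image and runs recognition on each crop, giving one text result per region. The sketch below illustrates only that idea and is not the clsAzureMLGetText implementation: the `Region` type, the `crop` and `extract_text` helpers, and the character-grid "image" with a stand-in OCR function are all hypothetical, chosen so the example runs without an OCR service.

```python
from typing import Callable, Dict, List, NamedTuple


class Region(NamedTuple):
    """Hypothetical named region: top-left corner plus size."""
    name: str
    x: int
    y: int
    width: int
    height: int


def crop(rows: List[str], region: Region) -> List[str]:
    """Cut the region out of a row-major grid (here, one string per row)."""
    selected = rows[region.y : region.y + region.height]
    return [row[region.x : region.x + region.width] for row in selected]


def extract_text(
    rows: List[str],
    regions: List[Region],
    ocr: Callable[[List[str]], str],
) -> Dict[str, str]:
    """Run the supplied OCR callable on each cropped region, keyed by region name."""
    return {region.name: ocr(crop(rows, region)) for region in regions}


def fake_ocr(cropped: List[str]) -> str:
    """Stand-in for a real OCR call: joins the cropped rows as plain text."""
    return " ".join(row.strip() for row in cropped)


# A two-row "image" made of characters, purely for demonstration.
page = [
    "INV-1001  2024-05-01",
    "Total:" + " " * 8 + "42.00",
]
regions = [
    Region("InvoiceNumber", x=0, y=0, width=8, height=1),
    Region("Total", x=14, y=1, width=5, height=1),
]
result = extract_text(page, regions, fake_ocr)
print(result)
```

With a real image, `crop` would operate on pixel data and `ocr` would call the recognition service, but the name-to-text mapping would have the same shape.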